What Are You Looking For?

Discoveries Made Through a Library Website Usability Study

Abstract

After providing input on the development of a new website for the University Libraries, the librarians at Murray State University decided to conduct a usability study before switching to the new website, to determine how well the new design supports the research and information needs of its primary users, students and faculty. A usability study group embarked on a process of learning, implementation, and discovery. This article walks through that process and details how we prepared for the study, how we implemented it, and what we learned from the participants about how our library website is used and how to improve the design to better support users’ needs. We share some findings that are broadly applicable for improving library websites as well as some surprises we discovered.

Background

Murray State University (MSU) is a four-year public liberal arts institution located in far-western rural Kentucky. Our combined undergraduate and graduate enrollment hovers around 9,500 students. The University Libraries consist of three libraries: a main library, a special collections library, and a law library that is now closed but still houses materials that can be requested.

When we began the project to update the University Libraries’ homepage, it was six years old. While this isn’t terribly old for a library website, it was old enough to know better. The update process at the time was neither ideal nor consistent. We had to rely on our institutional web developers to make changes on our behalf (and we hated to ask!). Additionally, the old homepage had some design flaws that needed to be addressed. The hierarchy was indeterminate, and it lacked organizational context. There was no persistent navigation bar (either a header or footer) from the homepage to the LibGuides pages which contained the content behind the homepage. Finally, we were concerned with whether the terminology was a help or a hindrance.

By creating a homepage using LibGuides, we could shift maintenance and upkeep to the library which would be mutually beneficial to the library and IT department. It allows in-house control of the homepage while unburdening the University Webmaster. However, the University Webmaster does continue to serve as an informal advisor/source of support for the Special Projects Coordinator, who now manages the website.

Before initiating this shift to in-house control of the homepage, we took stock of the advantages and challenges it would bring. We knew it would allow the homepage to be more dynamic and let us evolve our site as our users’ needs and our collections evolve. Additionally, it would enable us to be more flexible in tailoring pages or groups of pages related to the entities under the Libraries’ umbrella (e.g., Writing Center, Special Collections, Makerspace). Updates and corrections could be made quickly, and we could promptly address issues (technical and otherwise) from a library perspective and platform. Finally, we could get more value from the LibGuides CMS that we were already using, with the added bonus of the Springshare support and community.

However, while the Special Projects Coordinator had served as the LibApp administrator for several years and was comfortable with configuration and functionality, she was not a front-end developer and had no programming training or knowledge. In order to create the homepage, she needed to teach herself coding within the LibGuides environment. This involved repeated binge-watching of Springshare video tutorials on Bootstrap, getting more in-depth with custom HTML and JavaScript/CSS, and sending many “please help me” emails to both Springshare and MSU Information Technology (IT) support. Additional challenges were ensuring we had a plan and workflow in place to notify our users in the event of a LibApp outage, and training additional personnel to update and troubleshoot the site.

If you are exploring the possibility of developing a customized homepage via LibGuides but don’t know where to begin, the following are lessons learned that may help make your project more manageable:

- Curate a variety of training resources. There are a lot of training materials on the Springshare site, including LibGuides customization tutorials, webinars, and knowledge base articles on Bootstrap, HTML, and JavaScript/CSS. There are also many websites devoted to coding instruction and UX design/accessibility. Other academic libraries’ guides and presentations about how they customized their LibGuides are very helpful as well. Expect the process to feel like learning a new language.

- Know your limits, but also push them. If you see something you like, it’s most likely doable. Be patient and keep building your knowledge and skills to the point where you begin to understand this new language. While working to synthesize the elements we liked, the Special Projects Coordinator had to work within her skill set at the time, that is, what she could actually accomplish as a self-taught beginner. Start simple, then evolve elements as you learn more.

- If your organization has an IT department or web team, reach out proactively to determine how they need to be involved. Let them know your plans and ask for feedback and help. The Special Projects Coordinator worked with the University Webmaster to make sure our homepage blended with the rest of MSU’s branding, and MSU’s IT also had to adjust some configurations in the back office of the University’s main site to ensure a smooth transition to the new homepage.

Appendix 4 includes a collection of resources to assist with a homepage customization process.

Timeline

The redesign of the Libraries’ homepage took place over a 15-month period beginning in October 2021 with an internal review and survey of nine academic library homepages built using LibGuides. A group of library faculty and staff gave feedback about these pages and the options available on them. The pages we liked most were those where simplicity and clarity were the main attraction, and that, as one reviewer mentioned, didn’t “look like” a LibGuide. Between October 2021 and February 2022, the Special Projects Coordinator synthesized that feedback into a draft homepage for the group to review and critique.

In May 2022, the Usability Testing Group was formed, consisting of two Research & Instruction Librarians, the Metadata Librarian, and the Special Projects Coordinator. Over the summer, the Usability Testing Group determined the scope and designed the usability study and, in August, invited members of the campus community to participate in the study. Participants were selected, and the usability study was then conducted during September and the beginning of October 2022. After the Usability Testing Group compiled and reviewed the results of the sessions, the Special Projects Coordinator revised the homepage and other site pages; our go-live day was January 9, 2023.

We embarked on the redesign motivated by the goal of having the same in-house control of University Libraries’ homepage as we do over our LibGuides. We wanted to be able to keep our homepage fresh and engage more with our users. Once we got into the process, we thought it would be beneficial to our users and ourselves to conduct a usability study before going live. Our hope is that this change positively impacts how users perceive our integrity, reliability, and responsiveness.

Design

None of the members of the Usability Testing Group had any training or experience with UX design or testing. The design and implementation of our usability study benefited greatly from Atla’s Usability Testing course available through the Atla Learn (https://learn.atla.com/) platform. We started with a planning document that walked us through the process of determining the scope, logistics, users and tasks, recruiting, and other details that we might not have considered otherwise.

We approached this project with the vague idea that we wanted to get feedback from users on how useful the new design is to their needs. Intentionally determining the purpose and scope helped us refine what areas of the site we wanted the testing to cover and which user groups we needed feedback from the most. As we moved forward in further development of the study, the purpose and scope helped guide decisions about participants and task creation.

We wanted the usability study to focus on navigation and findability and to ensure that the labeling was meaningful and helpful. We identified the concerns, questions, and goals for the study as:

- Can users navigate to important information from the library homepage?

- Are users able to find operational information (hours, phone numbers, policies)?

- Can users find and access specific resources and services?

The next part of the process of designing the usability study was to determine the logistics of the study. Logistics relate to the who, what, where, when, and how aspects of the study.

Developing the purpose and scope helped us focus on which user groups would participate in the study. Since students and faculty are the primary users of the website, their feedback was crucial to determining how useful and helpful the new design was.

Next, we needed to determine what areas of the website we were focusing the testing on and the type of information we wanted to elicit from the participants. We wanted the participants to interact mostly with the newly designed homepage and to determine how easy it was to navigate. We also wanted the usability study to mimic, as closely as possible, the ways participants access and use the website normally.

We debated whether to have a dedicated space in the library in which to conduct the usability studies but decided to use Zoom, as it would allow us to record each session, observe the participant’s screen while they were doing the tasks without being too intrusive, and let participants do the study from the comfort of their home, office, or dorm room. This minimized the amount of artificial noise that could influence the outcome of the study.

The timeline and length of the study will often be dictated by your schedule, the academic calendar, and possibly an external deadline. We absolutely did not want to launch the new website in the middle of the semester, so we needed to start and complete the usability study in the fall with the goal of a soft launch at the beginning of the spring semester. In January 2023, the new homepage was released with a link to the old homepage. Then in May, at the end of the semester, that link was removed. Another determination is how long each usability test will be. We limited each test to an hour.

How the usability study is conducted is the critical part of the process that facilitates the feedback you want to obtain. This article will go into more detail about methodology and task development later. Another logistical detail pertains to participants. It is helpful to offer an incentive for participating in the usability study. This signals the value of the participant’s feedback and compensates them, though quite modestly, for their time commitment. In our study, each participant received a gift card to the campus eateries and was also included in a drawing for two camp chairs (which were donated by a vendor).

Next, we circled back to the “who?” question and determined the roles that each member of the Usability Testing Group would play in the study: facilitator, note taker, and observer.

An important part of the design process is developing personas. A persona is a representation of a typical library user; in the case of a usability study, the personas represent people in the user groups that fit into the scope of the study. They turn the abstract concept of “user” into a person with thoughts and emotions, intentions, and challenges. At least one persona should be developed for each type of user, and you can develop as many as you want and have time to develop. We developed multiple personas for each user group (undergrads, graduate students, and faculty) based on the types of users within each group.

Personas aren’t pulled out of thin air but are developed and informed using demographic data as well as other data available from the library and the institution. Giving each persona a name helps to make them more lifelike. Fill out the personas with details you know from your interactions with students and faculty. Some of the elements we included in our personas were which program or college they were in, how many years they had been at MSU, whether English was their first language, what access they had to technology, and their comfort level related to digital literacy. Elements specific to students included whether they were residential, commuter, or at regional campuses. Elements specific to faculty included whether they were tenured or tenure-track, adjunct, or instructor. The most interesting part of the personas entailed thinking about and describing their needs as related to library resources, personality traits that might influence their approach to and use of the library and its resources, and their current feelings related to the library and research.

Developing our personas helped us understand the needs that drive library website usage and the feelings users bring to library websites, which in turn affect how they use them. We then developed tasks derived from the needs explored in the personas.

When developing the study, one of our early goals was to create tasks that participants could complete using the website. The task creation process included three phases: brainstorming, differentiation, and creation. In the brainstorming phase, we began discussing common tasks and information that students and faculty may come to the library website for. We determined that library users would navigate to the library website to conduct known-item searches, contact a librarian, request an interlibrary loan, search for scholarly sources, look up how to cite sources, or look up information for specific locations or services within the University Libraries (e.g., Writing Center, Oral Communications Center, Special Collections). These tasks were supported by our own experiences, in addition to data on questions frequently asked via library chat, in emails to librarians, and at the information desk.

We then differentiated tasks between the student and faculty user groups, highlighting the fact that overall, they would have different needs and purposes for using the site. When it came time to select the tasks that participants would complete, it was important to determine how many tasks to include that would fit within the hour time frame and provide sufficient data. We also wanted to include some tasks that could be completed using different paths or functions, depending on the participant’s preferences. These tasks are included in the scripts for the facilitator to read to ensure consistent implementation of the study for each participant. (See Appendices 2 and 3 for the scripts.)

Since we were using human subjects in the study, we submitted an application to the Institutional Review Board (IRB) and created an informed consent document. The IRB determined that our study did not need IRB oversight, but we still used the informed consent document as good practice. We recommend that any study involving human subjects be submitted to the institution’s IRB for review before proceeding.

Once we had the IRB’s determination, we were ready to implement the study, and for that we needed to recruit participants. We wanted to include both frequent library users and those who never darken the Library’s door, so it was important to broadcast the information about the study as widely as possible. We used all of the typical communication channels already in place, such as the Library’s social media pages, emails, and the University’s weekly electronic newsletter. These all included a link to the electronic survey form (see Appendix 1). We also handed out paper surveys in the Library, added the survey link to our current homepage, and posted a QR code to the survey at the information desk in the Library.

In addition to name and contact information, the survey included questions that provided information we used to select participants and included some diversion elements so that those who filled out the survey wouldn’t try to self-select. The selection criteria included the degree program they are in, how often they use the library, and what type of device they use. Diversion elements included activities they have used the library for and categories in the degree program that weren’t relevant to our study.

In addition to selecting participants from each user group (undergrads, graduate students, and faculty), we tried to include people who represented a variety of disciplines, ranks, and frequency of library use. We also tried to include users who accessed the library’s website from a variety of devices or systems.

Research on usability testing suggests that about five participants per user group are enough to uncover most usability problems. In addition to the five participants from each user group, we selected one more participant from each group for a pilot study, intending to use the pilot results to refine the tasks and process. After sorting through 51 completed surveys, we had 18 total participants in the usability study.
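The five-participant guideline comes from the problem-discovery model described in the Nielsen article listed in the references. As a rough sketch, and assuming the average per-participant discovery rate of 31% that Nielsen reports (the rate for any particular site may differ), the expected share of problems found and our participant count can be worked out as follows. The function and variable names are ours and purely illustrative.

```python
def share_of_problems_found(n_participants: int, discovery_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n participants.

    Uses the model 1 - (1 - L)^n, where L is the chance that a single
    participant runs into any given problem. L = 0.31 is the average
    Nielsen reports; it is an assumption here, not a measurement of our site.
    """
    return 1 - (1 - discovery_rate) ** n_participants


# Our study: five participants plus one pilot participant per user group.
user_groups = ["undergraduate", "graduate", "faculty"]
per_group = 5 + 1
total_participants = per_group * len(user_groups)

for n in (1, 3, 5, 6):
    print(f"{n} participants: {share_of_problems_found(n):.0%} of problems")
print(f"Total participants: {total_participants}")
```

Under this model the gains flatten quickly after the first few participants, which is why adding a sixth (pilot) participant per group is more useful for refining the tasks than for finding new problems.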

We then contacted the selected participants to schedule a day and time and sent them a Zoom link for the study.

Implementation

Having participants connect via Zoom was the session modality that best fit our needs. We discussed the pros and cons of this method compared to having participants come to the library and use the website while we were in the room with them. We determined that to best inform our study, it was important to be able to see participants’ screens and observe their cursor, which could be achieved by using Zoom’s screen share feature. Additionally, we were able to record the session and review the recordings if we needed to. Also, we didn’t have software for tracking cursors or eye movements, which are two other ways of gathering data for the usability study of websites, but Zoom software is ubiquitous.

The overall flow of a usability session consisted of the participant connecting via Zoom, the facilitator briefly explaining the purpose of the study and giving the participant tasks, and the facilitator asking follow-up questions.

Tasks could be given one at a time, either verbally or in writing in Zoom’s chat, or all at once, either in chat or through a link to a document. We decided to give tasks one at a time verbally so that participants wouldn’t be distracted by the other tasks and the observers would be able to differentiate between tasks in assessing website functions. If participants needed a task repeated, we typed it into the chat. The facilitator asked participants to think aloud while completing each task so that we could gain feedback about the site. This think-aloud feedback was the primary and most important feedback about the usability of the website, so it was crucial to the purposes of our study. However, because thinking aloud while working on a task feels unnatural to most people, it was quite challenging at times to gain this feedback.

Many participants commented on their concern for not doing the task “right.” The facilitator reassured participants that we were not so much interested in whether or not they were able to complete the task but in how the library website either helped or hindered their ability to complete the task.

After each task, the facilitator asked three follow-up questions:

- How easy or difficult was the task with 1 being difficult and 7 being easy?

- How satisfying was it for you to do the task with 1 being unsatisfying and 7 being very satisfying?

- How confident are you that you completed the task fully with 1 being not at all confident and 7 being very confident?

The facilitator typically asked participants to elaborate when they gave scores that were in the 2-6 range, as that indicated that there was some nuance to their positive or negative response.
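The follow-up rule above can be sketched in a few lines of Python. This is only an illustration: the `TaskRating` record and its field names are hypothetical, the sample scores are invented, and we assume “the 2-6 range” means any score other than the endpoints 1 and 7.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskRating:
    """One participant's answers to the three post-task questions (1-7)."""
    ease: int
    satisfaction: int
    confidence: int


def needs_elaboration(rating: TaskRating) -> list[str]:
    """Return the questions whose score falls in the 2-6 range."""
    scores = {
        "ease": rating.ease,
        "satisfaction": rating.satisfaction,
        "confidence": rating.confidence,
    }
    return [name for name, score in scores.items() if 2 <= score <= 6]


# Invented example data for a single task, not results from our study.
ratings = [TaskRating(7, 7, 7), TaskRating(4, 5, 7), TaskRating(1, 2, 3)]

for r in ratings:
    print(r, "-> ask about:", needs_elaboration(r) or "nothing")

# With so few participants, a median is a safer summary than a mean.
print("Median ease:", median(r.ease for r in ratings))
```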

We decided to not debrief any further after each task (although that would have been an option) as we didn’t want that debrief to influence how users approached future tasks. Other than to elicit active feedback, the only time the facilitator intervened was when participants left the website completely or otherwise were no longer completing the given task.

After the participant completed all the tasks, the facilitator provided them with a list of adjectives, and they were asked to choose up to three words that best represented how they felt about the website after working through the tasks.

Analysis

In analyzing the feedback from the usability studies, one of the first parameters we looked at was how participants felt about the new interface overall. To this end we took the adjectives that each participant was asked to provide at the end of the session and performed a frequency analysis. We found that the majority of the terms chosen were positive. The most common comments were related to the clean, professional look with lots of white space and the intuitiveness of the interface. On the more critical side we also received multiple comments that while the page itself looked clean and simple, the interface also seemed complicated or busy. This was likely due to the number of options in the menus.
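A frequency analysis of this kind needs nothing more than a tally. The sketch below shows one way to do it in Python; the responses and the positive/negative grouping are invented placeholders, not the adjective list we gave participants or the words they chose.

```python
from collections import Counter

# Invented responses: each participant chose up to three adjectives.
responses = [
    ["clean", "professional", "intuitive"],
    ["clean", "busy"],
    ["intuitive", "clean", "complicated"],
]

# Hypothetical grouping of words by sentiment, assumed for illustration.
positive_words = {"clean", "professional", "intuitive"}

# Flatten all choices into one tally of how often each word was picked.
counts = Counter(word for chosen in responses for word in chosen)
total = sum(counts.values())
positive = sum(n for word, n in counts.items() if word in positive_words)

for word, n in counts.most_common():
    print(f"{word}: {n}")
print(f"Positive share: {positive / total:.0%}")
```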

A second analysis we made was a first click analysis. This type of analysis is important because research shows that participants who click the correct link the first time complete their task successfully in a majority of cases, while participants who choose the wrong path are significantly less likely to eventually succeed. To perform this analysis, the observers noted the first link that a participant clicked on during each task. They disregarded any cursor hovering, expanding of dropdown menus, or comments made and focused specifically on when the participant first navigated away from the homepage. The results helped us understand how the participants interpreted certain navigational and design elements and provided insight into how some terminology could be confusing.

The first click analysis showed that navigational preferences varied between groups; undergraduates were much more likely to search for an article using the article specific tab in the search bar while faculty either gravitated directly to the databases or used the general function of the search bar. We also found that graduate students and faculty were much more likely to correctly and easily navigate to the interlibrary loan page while undergraduates struggled with the task, likely due to not understanding the library jargon.
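For readers who want to tabulate their own observer notes, a first click analysis by user group can be sketched as below. Everything in the example is an assumption made for illustration: the task names, link labels, which clicks count as a correct path, and the data itself are invented and do not reproduce our results.

```python
from collections import defaultdict

# Invented observer notes: (user group, task, first link clicked).
first_clicks = [
    ("undergraduate", "find_article", "Articles tab"),
    ("undergraduate", "interlibrary_loan", "FAQs"),
    ("faculty", "find_article", "Databases"),
    ("faculty", "interlibrary_loan", "Interlibrary Loan"),
    ("graduate", "interlibrary_loan", "Interlibrary Loan"),
]

# Hypothetical set of first clicks that count as a correct path per task.
correct_paths = {
    "find_article": {"Articles tab", "Databases", "Search bar"},
    "interlibrary_loan": {"Interlibrary Loan"},
}


def first_click_success(clicks, correct):
    """Return {(group, task): share of first clicks on a correct path}."""
    tally = defaultdict(lambda: [0, 0])  # [correct clicks, all clicks]
    for group, task, link in clicks:
        tally[(group, task)][1] += 1
        if link in correct[task]:
            tally[(group, task)][0] += 1
    return {key: hits / seen for key, (hits, seen) in tally.items()}


results = first_click_success(first_clicks, correct_paths)
for (group, task), rate in sorted(results.items()):
    print(f"{group:13} {task:18} {rate:.0%}")
```

Allowing several correct first clicks per task reflects the point made above: different groups may reach the same goal by different, equally valid paths.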

Lastly, we looked at the notes provided by the observers as well as statements that participants made during each session. As indicated before, some participants thought aloud more readily than others, but we were able to determine that navigation preferences varied and so there was a need to accommodate those preferences.

There was no distinct preference between using the Quick Access Menu and the Dropdown menus so both needed to be available. Some participants gravitated immediately to the FAQs to complete the task while some faculty were inclined to leave the webpage entirely for certain tasks. We were surprised at some navigational choices made by participants and learned about some functionality of which we were not previously aware.

Due to the nature of the study design most of the data is qualitative rather than quantitative. This means that we focused largely on collecting insights into specific user behaviors through both observation and participant feedback during the session. We paid particular attention to comments and suggestions made by participants during completion of the tasks and prompted participants to describe their thought process if needed.

Though the design of our study was intended to gather mostly qualitative data, we did collect some quantitative data including the amount of time on task and whether tasks were completed successfully. Due to the small number of participants and some participants’ tendency to explore the site during early questions, we did not find this data particularly useful.

There are a lot of options for usability analysis, some of which we chose not to focus on. For example, we created our new homepage without conducting any wireframing and prototyping nor did we do any comparison testing with the old homepage since we knew we were sunsetting it. We also chose to focus on conducting moderated testing for more in-depth results and therefore did not conduct surveys related to the design.

Moving Forward

As we observed the participants of the study, it became apparent that a good deal of their difficulty in completing the tasks was due to deficiencies in our site beyond the homepage. While we knew there were areas that needed improvement, the usability sessions brought to light issues that we were not aware of. Part of this was due to our familiarity with library jargon and where things are located on the site. We needed the perspective of those who are using the website for the first time and are confronted with new terminology to help us understand how our website is hindering users from accomplishing their task. To that end, while the usability study gave us valuable feedback for the homepage design, it also provided us with information that will inform the revision of the entire website.

After the usability study was complete, a Website Implementation Committee was formed that is tasked with identifying both immediate homepage revisions based on the study as well as addressing longer-term website projects. This group is focusing on ways to streamline, better organize, and pare down content.

We immediately began an ongoing process of reconsidering library jargon. Some terms that were identified in the usability study and the terms we replaced them with include:

| Jargon | Better |
| --- | --- |
| LibGuides, Research Guides | Research Assistance or Help |
| Interlibrary loan (ILL), Access Services | Borrow from Other Libraries |
| Circulation | Borrowing, Check Out Materials |

TABLE 1. Jargon replaced with better terminology

Next, we added a navigation bar dropdown menu with action-based options: Now users can find How to Check Out Materials, How to Cite Your Sources, and How to Borrow from Other Libraries (Interlibrary Loan), among other options.

Since the FAQs were the starting point for many tasks, especially for the undergrads, we started overhauling and updating our FAQs area. We also adjusted the search box design to make the background image less busy and links more prominent.

All of this effort resulted in a library homepage that we have confidence helps users find the resources and information they come to the homepage to find.

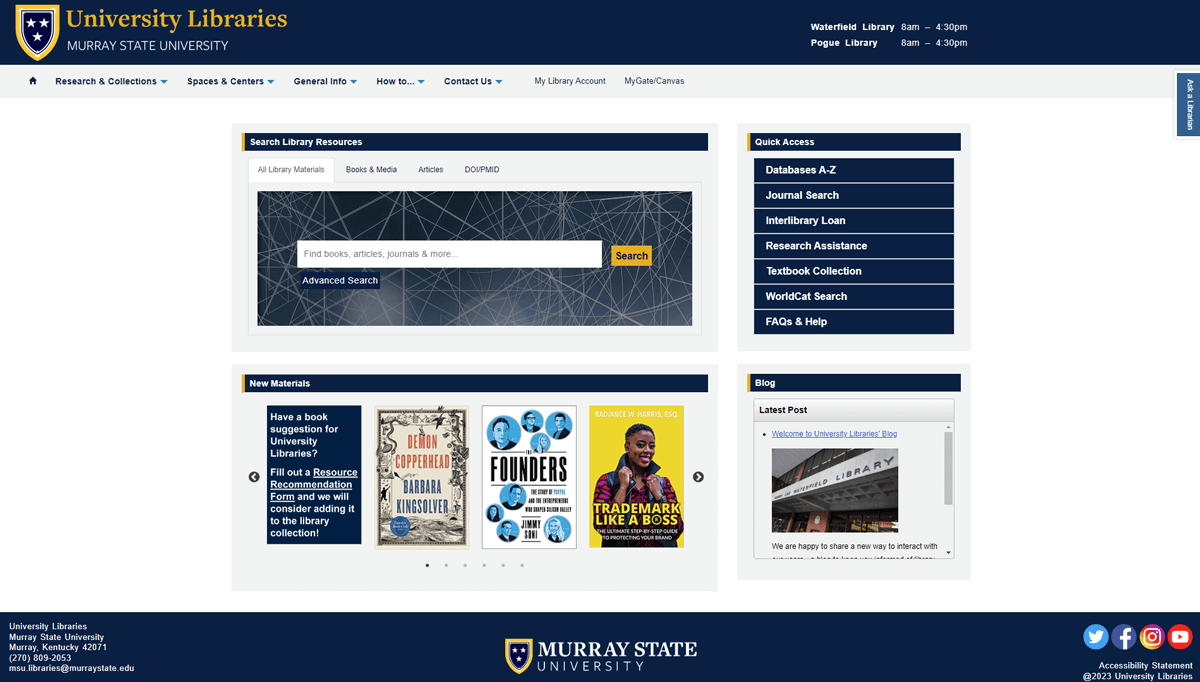

IMAGE 1. Newly revised MSU Libraries homepage (https://lib.murraystate.edu/new)

Lessons Learned

Ideally, we would have liked to have more participants in the study. Next time, we could have a longer recruitment period or longer study period and find new avenues to reach potential participants.

Participants explaining out loud what they were doing and thinking during the usability sessions was essential to understanding whether the homepage design worked. Asking more questions from “Things a Therapist Would Say” during this time might help elicit more feedback. For example, one suggested question is “Can you explain what you are looking at or looking for right now?”

Something that librarians need to keep in mind is that the purpose of the homepage is primarily to help users complete tasks and find information, as opposed to providing the information librarians want users to know. To that end, we need to streamline the content of our LibGuides, avoid duplication, and present information in a concise manner. In general, less is more.

It has been said before but it is worth repeating: avoid library jargon as much as possible. This would be a good talk-back board topic for students: putting library jargon words on the board and asking which ones they know to further our understanding of the roadblocks on our site.

Finally, we must continually strive to improve our universal design/accessibility.

References

“First Click Testing.” Usability.gov. July 7, 2023. https://www.usability.gov/how-to-and-tools/methods/first-click-testing.html

Krug, Steve. 2010. “Things a Therapist Would Say.” Rocket Surgery Made Easy: The Do-It-Yourself Guide to Finding and Fixing Usability Problems. http://sensible.com/downloads/things-a-therapist-would-say.pdf

Libby, Allan Garrison and Joy L. Yaeger. 2017. “LibGuides As a Platform for Designing a Library Homepage.” Virginia Libraries 62, no. 1 (September 14). doi:10.21061/valib.v62i1.1463

Paiz, Gabriela. “50 Must-See User Persona Templates.” Justinmind. March 29, 2023. https://www.justinmind.com/blog/user-persona-templates/

Nielsen, Jakob. “Why You Only Need to Test with 5 Users.” Nielsen Norman Group. March 28, 2020. https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/

Schade, Amy. 2017. “Write Better Qualitative Usability Tasks: Top 10 Mistakes to Avoid.” Nielsen Norman Group (April 9). https://www.nngroup.com/articles/better-usability-tasks/

Appendix 1. Library Website Usability Evaluation – Interest Form

Screener Survey

We are recruiting participants for an upcoming website usability evaluation. Participants will be asked to use a website and provide comments and feedback about the website.

These in-person or virtual sessions will use the Zoom conferencing platform and are being scheduled throughout September and October. Each session will last up to one hour.

If you are selected, as a thank you for your time, you will receive a $10 gift card for on-campus food service at the beginning of the session and be entered into a drawing to win camp chairs.

1. Are you interested in participating?
   - Yes
   - No (thank you for your time, end survey)

2. Do you have 1 hour to participate on a weekday during September or October?

3. Are you okay with us recording your voice and your computer screen during the session? (This will only be used internally to help us understand your experience of using the website)

4. Are you a student, faculty or staff? (redirect students to #5, others to #6)
   - Student
   - Faculty
   - Staff

5. What type of degree program are you in?
   - Student-at-large/Non degree seeking
   - Undergraduate
   - Certificate program
   - Masters
   - Doctoral
   - Other

6. How often do you use the library in person or online?
   - Never
   - 1-4 times a month
   - 5-10 times a month
   - More than 11 times a month

7. What activities have you used the library for? (check all that apply)
   - Finding articles in databases
   - Finding books
   - Archival and special collections
   - Quiet space for study
   - Help from a librarian (research consultation, help using a database, etc.)
   - Other

8. What computer operating system do you usually use? (check all that apply)
   - Windows
   - Macintosh
   - Unix
   - Other

Name

Phone

Thank you for your interest! If selected, you will receive an email at the beginning of September with further details. Only those contacted and selected to complete the interview will receive the gift card.

Appendix 2. Usability Study Script (Student Participants)

Script for Student

https://libguides.murraystate.edu/new

Thank you for coming to this session today. My name is David Sye. I am a librarian and will be the facilitator for this evaluation.

The University Libraries is currently working to improve the design of the Library’s website. As part of that process we have asked a few people to help evaluate the new design. To do this, I am going to ask you to work through doing a few things using the library’s new website to get your feedback on what you experience.

- The purpose of doing this is to understand how well the new university libraries’ website meets the needs of users.

- The session is scheduled for 60 minutes and you will receive a $10 gift card to any of the campus food service locations for your participation.

- Your participation is entirely voluntary, and you may leave or take a break any time you would like.

Any questions so far?

With your permission, I will be recording the session with audio and video.

The data from your session will be used to help us figure out how to improve the website and the findings may be published. None of the data will be seen by anyone except the people working on the project and your participation in this session will be anonymous.

I am going to send you the consent form in the chat window. Please take a few moments to review the consent form and let me know if you have any questions.

We are ready to get started. The first thing to do is share your screen with me and I will start the recording. This is so I can watch what you are doing in the browser.

The share screen button should be at the bottom center of the Zoom screen.

(CLICK THE RECORD BUTTON)

I am sending you another document in chat — https://docs.google.com/document/d/1EFpSzbpET9raMm4W0H7VIGg-yLMI-I00ZQRTq1x6_jY/edit?usp=sharing

Please read the “Thinking Aloud” explainer out loud to me.

[They read “Thinking Aloud” explainer]

As you are working, think out loud and describe what you are looking at, what you are looking for on the screen, anything that is confusing, anything that surprises you, or even things you wonder as you use the site. Keep up a running monologue as you use the site, like a stream of consciousness, and anything you say will be helpful.

- Tell me if there is anything on the website that doesn’t make sense or that you think is particularly easy to understand.

- Also please do not worry that you are going to hurt my feelings. I am doing this to improve the site, so I need to hear your honest reactions.

- I will be taking notes as you work.

- I won’t be talking with you much because the more I talk with you, the more I may impact what you do.

- The situation is unnatural and can feel awkward, but please try to work as if you were alone, and do what you would normally do.

- AND Remember, I am not evaluating you in any way. Watching you helps me evaluate the system.

- There is no wrong answer and you can’t do anything wrong here.

I will give you the tasks one at a time. There is no need to rush.

Go as far as you would if you were alone. When you feel you have completed a task or you want to stop doing it at any point, please tell me. Say something like, “I am finished,” or “I want to stop this task here.”

Do you have any questions?

- I will read you the task. Please let me know if you need me to repeat it. If you would like a written version of the task I can send that to you in chat.

- Please use the link in the chat to go to the website. https://libguides.murraystate.edu/new

- Remember, we are not so much interested in whether or not you complete the task but in how the library website helps or hinders your ability to complete the task.

[Read through tasks]

- Determine if the library has access to the book Harry Potter and the Chamber of Secrets and indicate to the facilitator how you would get it.
- Locate an article on the topic of media literacy.
- Find a research guide related to your major.
- Check to see if there is an online textbook available for a class you’re taking this semester.
- Find the direct phone number of a staff member in the circulation department.
- Request a resource that’s not available in the library.
- Where is the Curriculum Materials Center located?
- Where is the Pogue Reading Room located?
- Find information about scheduling an appointment in the Oral Comm Center.
- Determine how many books a [undergrad/graduate] student can have checked out at the same time.
- Locate information for how to cite a book in APA format.

(After each task)

- Thank you, that was very helpful.
- I am now going to ask you 3 quick questions about the task you just completed.
- Please rate the task you just worked on a scale of 1 to 7, with 7 being the most positive answer and 1 being the most negative answer.
- How easy or difficult was the task with 1 being difficult and 7 being easy?
- How satisfying was it for you to do the task with 1 being unsatisfying and 7 being very satisfying?
- How confident are you that you completed the task fully with 1 being not at all confident and 7 being very confident?

- [Ask for any comments on the activity and/or ask if anything was particularly easy or difficult.]

- [Ask specific questions about what you noticed/noted down - may want to save this till the very end to avoid leading them for the next tasks.]

(At the very end)

- Thank you so much for helping out today.
- Click the button to stop sharing your screen.
- I’m going to share my screen. On it you will see a list of adjectives. [share screen]
- Choose up to 3 words from the list that best represent how you feel about the website after this activity (see Table 2).

TABLE 2 Adjectives

Boring
Exciting
Ugly
Busy
Simple
Intimidating
Calm
Familiar
Old
Complicated
Fresh
Professional
Attractive
Impressive
Trustworthy
Modern
Innovative
Reliable
Unprofessional

I am stopping the recording now.

Appendix 3. Usability Study Script (Faculty Participants)

Script for Faculty

https://libguides.murraystate.edu/new

Thank you for coming to this session today. My name is David Sye. I am a librarian and will be the facilitator for this evaluation.

The University Libraries is currently working to improve the design of the Library’s website. As part of that process we have asked a few people to help evaluate the new design. To do this, I am going to ask you to work through doing a few things using the library’s new website to get your feedback on what you experience.

- The purpose of doing this is to understand how well the new university libraries’ website meets the needs of users.
- The session is scheduled for 60 minutes and you will receive a $10 gift card to any of the campus food service locations for your participation.
- Your participation is entirely voluntary, and you may leave or take a break any time you would like.

Any questions so far?

With your permission, I will be recording the session with audio and video.

The data from your session will be used to help us figure out how to improve the website and the findings may be published. None of the data will be seen by anyone except the people working on the project and your participation in this session will be anonymous.

I am going to send you the consent form in the chat window. Please take a few moments to review the consent form and let me know if you have any questions.

[consent form]

We are ready to get started. The first thing to do is share your screen with me and I will start the recording. This is so I can watch what you are doing in the browser.

The share screen button should be at the bottom center of the Zoom screen.

(CLICK THE RECORD BUTTON)

I am sending you another document in chat: https://docs.google.com/document/d/1EFpSzbpET9raMm4W0H7VIGg-yLMI-I00ZQRTq1x6_jY/edit?usp=sharing

Please read the “Thinking Aloud” explainer out loud to me.

[They read “Thinking Aloud” explainer]

As you are working, think out loud and describe what you are looking at, what you are looking for on the screen, anything that is confusing, anything that surprises you, or even things you wonder as you use the site. Keep up a running monologue as you use the site, like a stream of consciousness, and anything you say will be helpful.

- Tell me if there is anything on the website that doesn’t make sense or that you think is particularly easy to understand.
- Also please do not worry that you are going to hurt my feelings. I am doing this to improve the site, so I need to hear your honest reactions.
- I will be taking notes as you work.
- I won’t be talking with you much because the more I talk with you, the more I may impact what you do.
- The situation is unnatural and can feel awkward, but please try to work as if you were alone, and do what you would normally do.
- AND Remember, I am not evaluating you in any way. Watching you helps me evaluate the system.
- There is no wrong answer and you can’t do anything wrong here.

I will give you the tasks one at a time. There is no need to rush.

Go as far as you would if you were alone. When you feel you have completed a task or you want to stop doing it at any point, please tell me. Say something like, “I am finished,” or “I want to stop this task here.”

Do you have any questions?

- I will read you the task. Please let me know if you need me to repeat it. If you would like a written version of the task I can send that to you in chat.
- Please use the link in the chat to go to the website. https://libguides.murraystate.edu/new
- Remember, we are not so much interested in whether or not you complete the task but in how the library website helps or hinders your ability to complete the task.

[Read through tasks]

- Locate a resource related to your area of research.
- Request a resource that’s not available in the library.
- Determine how many books a faculty member can have checked out at the same time.
- Check to see if there is an online textbook available for a class in your college/department.
- Determine how far ahead you should schedule a classroom before you need it.
- Locate information for how to cite a book in APA format.
- Determine what recording equipment is available from the library.

(After each task)

- Thank you, that was very helpful.
- I am now going to ask you 3 quick questions about the task you just completed.
- Please rate the task you just worked on a scale of 1 to 7, with 7 being the most positive answer and 1 being the most negative answer.
- How easy or difficult was the task with 1 being difficult and 7 being easy?
- How satisfying was it for you to do the task with 1 being unsatisfying and 7 being very satisfying?
- How confident are you that you completed the task fully with 1 being not at all confident and 7 being very confident?
- [Ask for any comments on the activity and/or ask if anything was particularly easy or difficult.]
- [Ask specific questions about what you noticed/noted down - may want to save this till the very end to avoid leading them for the next tasks.]

(At the very end)

- Thank you so much for helping out today.
- Click the button to stop sharing your screen.
- I’m going to share my screen. On it you will see a list of adjectives. [share screen]
- Choose up to 3 words from the list that best represent how you feel about the website after this activity (see Table 2).

I am stopping the recording now.

Appendix 4. Coding Resources for Customizing Your LibGuides Homepage

Springshare Resources

(Log in to LibGuides to access Springshare resources.)

Using LibGuides CMS for Library Websites

This mini-site combines articles, videos, and resources for making LibGuides customizations.

Other LibGuides customization training videos not on the above mini-site:

- Video: Creating a Custom Homepage
- Video: Homepage Advanced Customizations

Power Your Website with LibGuides CMS: Academic Library Examples

- https://library.daytonastate.edu/index – search bar is Primo
- https://library.redlands.edu/home – search bar is Primo

Non-Springshare Resources

Learn to Code – W3 Schools

Tutorials, references, and exercises for many programming languages. The How To page shows you how to build all kinds of web elements.

How do I embed a Primo search widget on my library webpage and how can I be sure that it’s accessible? From the SUNY Office of Library & Information Services. So much useful information here.

Resources for Developers, by Developers – MDN Web Docs

An open-source, collaborative project with learning resources for beginners. Documents Web platform technologies including CSS, HTML, JavaScript, and Web APIs.

- CSS Pseudo Classes (especially tree-structural)
- CSS Attributes Selectors

W3C - Web Accessibility Initiative

https://www.w3.org/WAI/standards-guidelines/wcag/

WebAIM Contrast Checker

https://webaim.org/resources/contrastchecker/

Add this extension to your browser to aid in checking for accessibility issues. It doesn’t catch everything, and sometimes it flags things that are actually okay, but it is very helpful overall.
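The WebAIM Contrast Checker listed above reports the color contrast ratio defined in the WCAG guidelines. As a rough illustration of the arithmetic behind that kind of check, the Python sketch below computes the ratio for two hex colors. The function names are our own illustrative choices and are not part of any WebAIM or W3C tool. The 4.5:1 threshold is the WCAG AA minimum for normal-size text.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light, per the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as '#RRGGBB' (0.0 = black, 1.0 = white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio: (lighter + 0.05) / (darker + 0.05); order of arguments does not matter."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground: str, background: str) -> bool:
    """True if the pair meets the WCAG AA minimum of 4.5:1 for normal-size text."""
    return contrast_ratio(foreground, background) >= 4.5


if __name__ == "__main__":
    # Hypothetical color pairs, chosen only for illustration.
    for fg, bg in [("#000000", "#FFFFFF"), ("#767676", "#FFFFFF"), ("#777777", "#FFFFFF")]:
        print(fg, bg, round(contrast_ratio(fg, bg), 2), passes_aa_normal_text(fg, bg))
```

Black text on a white background gives the maximum possible ratio of about 21:1. A sketch like this only covers the numeric contrast test, so it complements rather than replaces the checker and a manual accessibility review.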